Hello there!

I am a Senior Research Scientist at NVIDIA and Lead of the AI Agents Initiative. My mission is to build generally capable agents across physical worlds (robotics) and virtual worlds (games, simulation). I share insights about AI research and industry extensively on Twitter/X and LinkedIn. Feel free to follow me!

My research explores the bleeding edge of multimodal foundation models, reinforcement learning, computer vision, and large-scale systems. I obtained my Ph.D. at the Stanford Vision Lab, advised by Prof. Fei-Fei Li. Previously, I interned at OpenAI (w/ Ilya Sutskever and Andrej Karpathy), Baidu AI Labs (w/ Andrew Ng and Dario Amodei), and MILA (w/ Yoshua Bengio). I graduated as the Valedictorian of the Class of 2016 and received the Illig Medal at Columbia University.

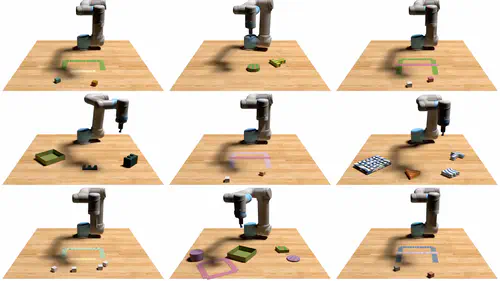

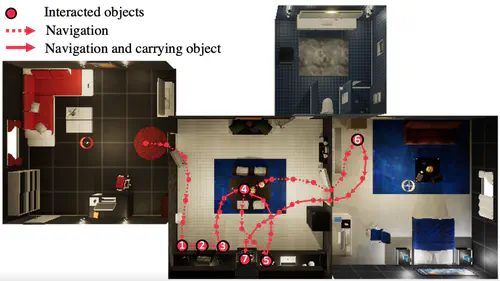

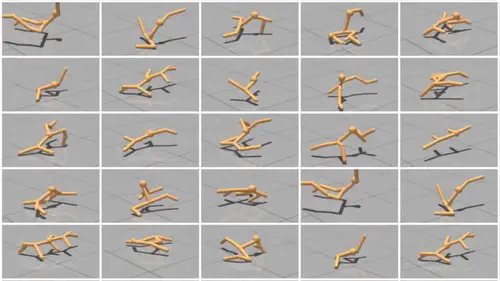

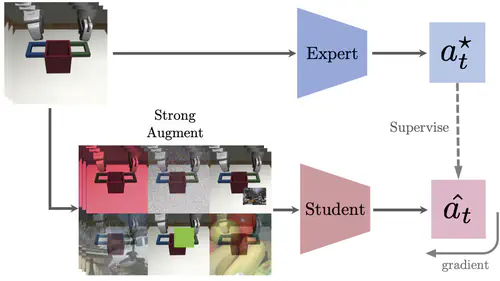

I spearheaded Voyager (the first AI agent that plays Minecraft proficiently and bootstraps its capabilities continuously), MineDojo (open-ended agent learning by watching hundreds of thousands of Minecraft YouTube videos), Eureka (a 5-finger robot hand doing extremely dexterous tasks like pen spinning), and VIMA (one of the earliest multimodal foundation models for robot manipulation). MineDojo won the Outstanding Paper Award at NeurIPS 2022. My work has been widely featured in news media, including The New York Times, Forbes, MIT Technology Review, TechCrunch, WIRED, and VentureBeat.

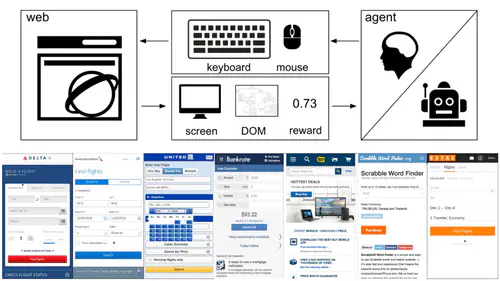

Fun fact: I was OpenAI’s very first intern in 2016. During that summer, I worked on World of Bits, an agent that perceives the web browser in pixels and outputs keyboard/mouse control. It was way before LLMs became a thing at OpenAI. Good old times!

Publications

Visit my Google Scholar page for a comprehensive listing!

Experience

- Conducting bleeding-edge research on foundation models for general-purpose autonomous agents.

- Leading the MineDojo effort for open-ended agent learning in Minecraft.

- Mentoring interns on diverse research topics.

- Collaborating with universities: Stanford, Berkeley, Caltech, MIT, UW, etc.

- Doctoral advisor: Prof. Fei-Fei Li.

- Ph.D. thesis: “Training and Deploying Visual Agents at Scale”.

- Co-designed World of Bits, an open-domain platform for teaching AI to use the web browser. World of Bits was part of the OpenAI Universe initiative.

- Paper published at ICML 2017.

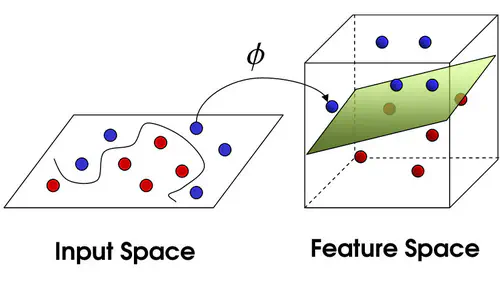

- Systematically analyzed and proposed novel variants of the Ladder Network, a strong semi-supervised deep learning technique.

- Mentored by Turing Award Laureate Yoshua Bengio.

- Paper published at ICML 2016.

- Co-developed DeepSpeech 2, a large-scale end-to-end system that achieved world-class performance on English and Chinese speech recognition.

- Mentored by Dario Amodei, Adam Coates, and Andrew Ng.

- Paper published at ICML 2016.

- DeepSpeech and derivative works have been featured in various media: MIT Technology Review, TechCrunch, Forbes, NPR, VentureBeat, etc.

- Columbia NLP Group, advised by Prof. Michael Collins. Studied kernel methods for speech recognition. Paper published in Journal of Machine Learning Research.

- Columbia Vision Lab, advised by Prof. Shree Nayar. Implemented a computer vision system in MATLAB to infer astrophysics parameters from galactic images.

- Columbia CRIS Lab, advised by Prof. Venkat Venkatasubramanian. Developed ML and NLP techniques to automate ontology curation for pharmaceutical engineering. Paper published in Computers & Chemical Engineering.